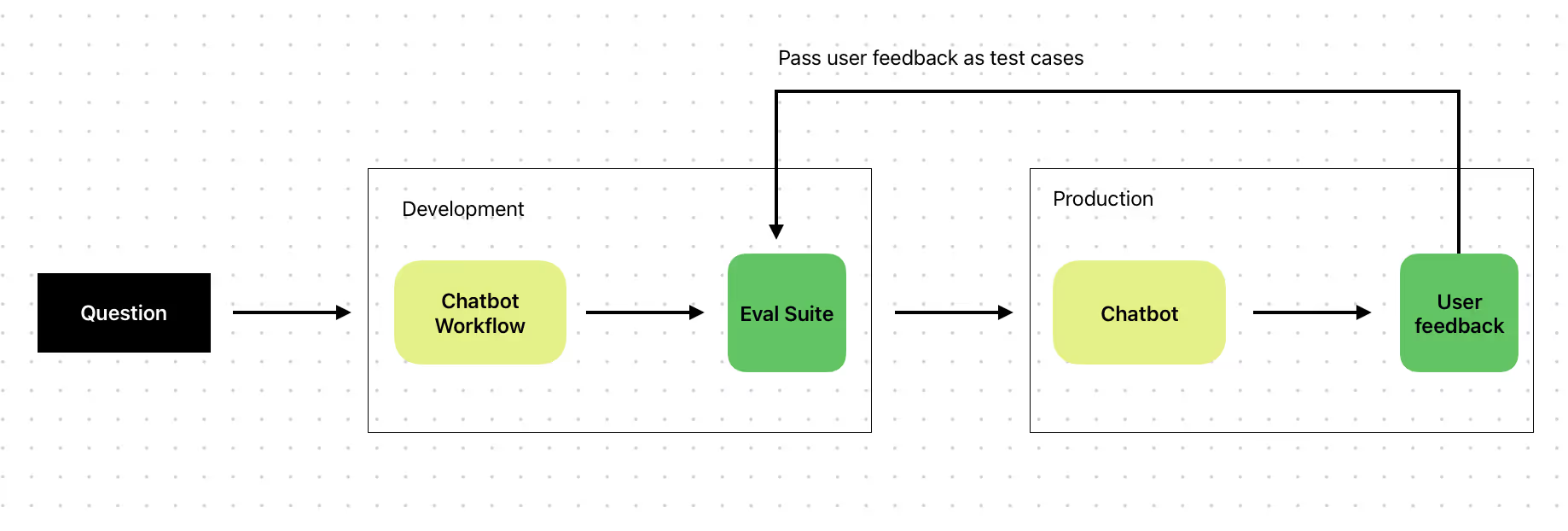

We’re excited to introduce a powerful new feature that allows you to capture end-user feedback on your AI system and use it as ground truth for your test cases. This gives you the ability to continuously refine your AI outputs, improving accuracy and ensuring your system delivers more reliable results over time.

Here’s how it works.

Capture feedback as test cases

Imagine you have a RAG chatbot that answers questions about your product's trust center and privacy policies. You've already set up a test suite to evaluate the chatbot’s performance across several important metrics, such as semantic similarity. This metric measures how closely the chatbot’s responses align with the correct answers.
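To make the semantic similarity metric concrete, here is a minimal sketch of how a score between a response and its expected answer might be computed. This is not Vellum's implementation: production metrics typically compare embeddings from a model, while this example substitutes a simple bag-of-words vector so that it runs with only the standard library. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lowercase the text and count word occurrences (a toy stand-in for an embedding)."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors; 0.0 if either is empty."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def similarity_score(response: str, expected: str) -> float:
    """Score how closely a chatbot response matches the expected answer."""
    return cosine_similarity(bag_of_words(response), bag_of_words(expected))
```

A score near 1 means the response uses nearly the same wording as the expected answer, and a score of 0 means the two share no words. An embedding-based metric would also credit paraphrases, which this word-overlap stand-in cannot do.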

Once your system is live, you can collect feedback, either directly from end users or from internal labeling, and record it as actuals. These actuals represent what the correct response should have been, based on real-world interactions.
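As a rough illustration of the idea, the sketch below turns feedback records into evaluation cases, using each actual as the expected output. The `FeedbackRecord` and `EvalCase` types and the `to_eval_cases` helper are hypothetical names invented for this example. They are not part of Vellum's API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class FeedbackRecord:
    question: str           # what the end user asked
    response: str           # what the chatbot answered
    actual: Optional[str]   # the correct answer from a user or labeler, if one was given


@dataclass
class EvalCase:
    input: str
    expected_output: str


def to_eval_cases(records: List[FeedbackRecord]) -> List[EvalCase]:
    """Keep only records that carry a non-empty actual and use it as ground truth."""
    cases = []
    for record in records:
        if record.actual and record.actual.strip():
            cases.append(EvalCase(input=record.question, expected_output=record.actual.strip()))
    return cases
```

Records without an actual are skipped here, on the assumption that feedback with no corrected answer has nothing to serve as ground truth.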

How it works

In Vellum, you can flag the incorrect response, mark it as a test case example, and add it to your evaluation suite.

With the new test case saved, you can go to your evaluation set and run the evaluation again to see how closely the chatbot’s output matches the updated ground truth.

If needed, you can tweak your prompts or workflows and rerun the evaluation until the system’s output aligns closely with the expected response. This process helps improve accuracy and ensures that the chatbot continuously gets closer to the correct answers.
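The tweak-and-rerun loop can be sketched in a few lines. This is an assumption-laden illustration rather than how Vellum runs evaluations: `run_evaluation` is a hypothetical helper, the `generate` argument stands for whatever prompt or workflow you are testing, and `difflib.SequenceMatcher` is a lexical stand-in for a real semantic similarity metric.

```python
from difflib import SequenceMatcher
from typing import Callable, List, Tuple


def run_evaluation(
    generate: Callable[[str], str],
    cases: List[Tuple[str, str]],
    threshold: float = 0.8,
) -> List[Tuple[str, float]]:
    """Return (input, score) for every case whose output scores below the threshold."""
    failures = []
    for question, expected in cases:
        output = generate(question)
        # Lexical similarity in [0, 1]; a real suite would use a semantic metric here.
        score = SequenceMatcher(None, output, expected).ratio()
        if score < threshold:
            failures.append((question, score))
    return failures


# Ground truth captured from end-user feedback (illustrative data).
cases = [("Where is data stored?", "Customer data is stored in the EU.")]


def chatbot_v1(question: str) -> str:
    return "I don't know."


def chatbot_v2(question: str) -> str:
    # Stands in for the system after a prompt or workflow change.
    return "Customer data is stored in the EU."


failures_before = run_evaluation(chatbot_v1, cases)
failures_after = run_evaluation(chatbot_v2, cases)
```

In this toy run, the first version fails the new test case and the revised version passes it, which mirrors the cycle of adjusting the system and rerunning the suite until no cases fall below the threshold.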

Why this matters

By incorporating end-user feedback into your testing cycle, you're creating a continuous improvement loop for your AI system.

This allows for faster iteration, more accurate outputs, and an overall improvement in AI system performance. Essentially, you're ensuring that your AI stays aligned with real-world expectations, while making it easier to spot and fix issues quickly.

Vellum is designed to support every stage of your AI development cycle — book a call with one of our AI experts to set up your evaluation.
