Debugging AI workflows often feels like chasing shadows, especially when trying to verify that your LLM is giving the right answers in every possible scenario. At Vellum, we know this challenge all too well, and that’s why we’ve introduced a solution to simplify this process: synthetic test case generation.

Here’s the gist:

When you’re building AI systems, you need test cases that simulate real user interactions.

For instance, we created a chatbot designed to answer questions about our company’s security policies. A user might ask, “What encryption does Acme Company use for my OpenAI key?” The chatbot should respond with the correct details, for example, “AES-256 encryption per our trust center policy.”

But generating enough test cases to cover edge cases, obscure questions, and everything in between is time-consuming.

That’s where synthetic test case generation steps in.

Synthetic test case generation in Vellum

To generate synthetic test cases in Vellum, we define an Evaluation Suite and run a custom Workflow that we designed for this purpose. Here’s a walkthrough of how we created synthetic test cases for our “Trust Center” Q&A bot:

Generating a Test Case Suite

We started by defining an Evaluation Suite in Vellum. We added a metric (e.g., "Ragas - Faithfulness") by naming it, describing its purpose, and mapping the output variable (e.g., "completion") to the target variable:

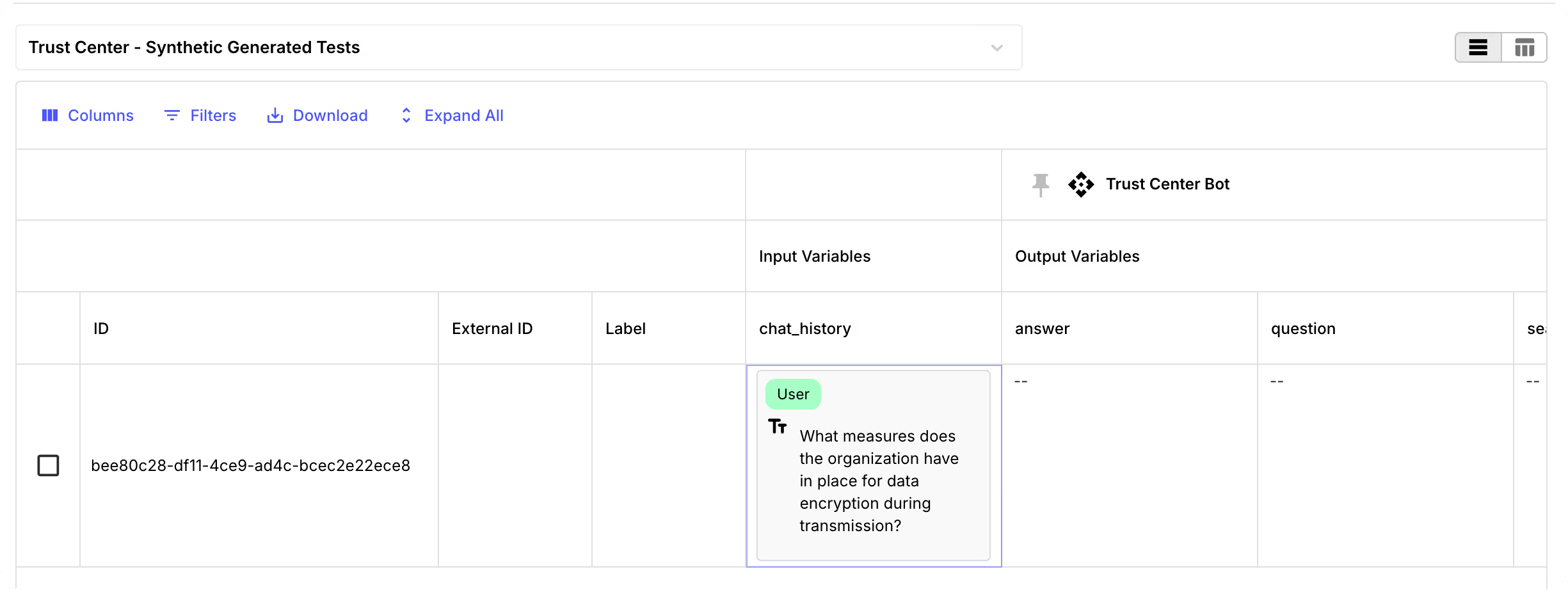

Then, we created one example test case in the Evaluation Suite for the LLM to use as a seed when generating more. In our case, we set up this starter Test Suite:

Creating the Workflow Template

Next, we set out to build a Workflow that could automatically generate test cases for the Evaluation Suite we just configured.

To achieve this, we designed a multi-step Workflow that performs two key tasks:

- Uses LLMs to generate test cases based on defined criteria

- Leverages our API endpoints to bulk upload the newly generated test cases into the specified Evaluation Suite
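The two tasks above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the actual Workflow: `SyntheticCase`, `build_prompt`, `generate_test_cases`, `build_bulk_payload`, and the payload keys are all hypothetical names, `fake_llm` is a stand-in for a real model call, and the request body does not reflect Vellum's real API schema.

```python
import json
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SyntheticCase:
    question: str         # simulated user input
    expected_answer: str  # answer the bot should give


def build_prompt(purpose: str, seed: SyntheticCase, count: int) -> str:
    """Ask the model for `count` new cases modeled on one seed example."""
    example = json.dumps(
        {"question": seed.question, "expected_answer": seed.expected_answer}
    )
    return (
        f"You write test cases for this AI system: {purpose}\n"
        f"Here is one example test case: {example}\n"
        f"Return a JSON array of {count} new, varied test cases with the same keys."
    )


def generate_test_cases(
    llm: Callable[[str], str], purpose: str, seed: SyntheticCase, count: int
) -> List[SyntheticCase]:
    """Step 1: call the model and keep only well-formed cases."""
    raw = json.loads(llm(build_prompt(purpose, seed, count)))
    cases = [
        SyntheticCase(item["question"], item["expected_answer"])
        for item in raw
        if isinstance(item, dict)
        and item.get("question")
        and item.get("expected_answer")
    ]
    return cases[:count]


def build_bulk_payload(suite_name: str, cases: List[SyntheticCase]) -> dict:
    """Step 2: shape the cases into one bulk-upload body (hypothetical schema)."""
    return {
        "test_suite": suite_name,
        "test_cases": [
            {"input": c.question, "expected_output": c.expected_answer}
            for c in cases
        ],
    }


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call; the second item is malformed on purpose."""
    return json.dumps(
        [
            {"question": "Is data encrypted at rest?", "expected_answer": "Yes, with AES-256."},
            {"question": "", "expected_answer": "dropped because the question is empty"},
        ]
    )
```

Keeping generation and upload as separate functions mirrors the two Workflow tasks, and filtering malformed items before upload keeps a bad model response from polluting the suite.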

Here’s the final version of the workflow:

Running the Workflow Template

To run the workflow we provided these three variables:

- Workflow Purpose: We specify what our AI system does

- Test Suite Name: We add the name of the Test Suite we created. In this case we just type: trust-center-synthetic-generated-tests

- Number of test cases: We then add the number of test cases we want to generate
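As a rough sketch, the three inputs could be modeled as a small validated record before a run. The class name, field names, and validation rules here are assumptions made for illustration, and the count of 25 is an arbitrary example value, not a figure from our run.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GeneratorInputs:
    workflow_purpose: str      # what the AI system does
    test_suite_name: str       # name of the existing Test Suite
    number_of_test_cases: int  # how many cases to generate

    def __post_init__(self) -> None:
        # Fail early on inputs that would produce an empty or misdirected run.
        if not self.workflow_purpose.strip():
            raise ValueError("workflow_purpose must not be empty")
        if not self.test_suite_name.strip():
            raise ValueError("test_suite_name must not be empty")
        if self.number_of_test_cases < 1:
            raise ValueError("number_of_test_cases must be at least 1")


inputs = GeneratorInputs(
    workflow_purpose="Answers questions about our company's security policies",
    test_suite_name="trust-center-synthetic-generated-tests",
    number_of_test_cases=25,  # arbitrary example value
)
```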

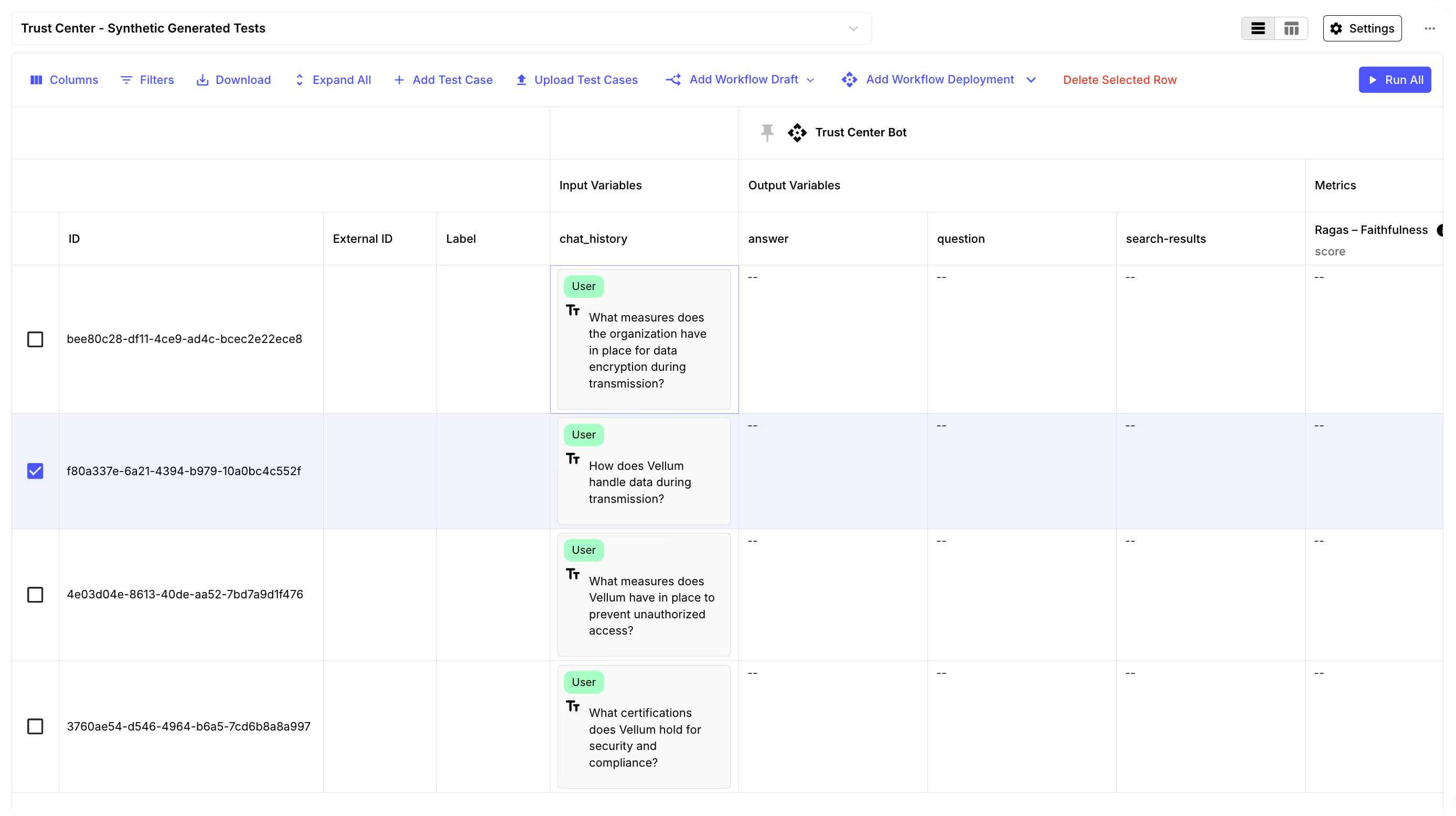

Once we ran the Workflow, the newly generated test cases were upserted directly into our Evaluation Suite, ready to be evaluated against our custom metrics, such as Ragas Faithfulness:

Why It Matters

Manually writing test cases is painful and doesn’t scale. Synthetic test case generation saves time, covers a wider range of user interactions, and adapts as your AI workflows grow in complexity.

Our customers tell us this feature is a game-changer. You’re no longer stuck writing endless test cases by hand or worrying about missing critical scenarios.

Want to try it for yourself? Let us know—we’re here to help!
