Learn about the current rate limits and strategies like exponential backoff and caching to help you avoid them.

Let’s begin by discussing how rate limits are enforced, and what tiers exist for different providers.

Rate limit measurements

OpenAI and Azure OpenAI enforce rate limits in slightly different ways, but they both use some combination of the following factors to measure request volume:

- Requests per minute (RPM)

- Requests per day (RPD)

- Tokens per minute (TPM)

- Tokens per day (TPD)

If you reach any of the above thresholds, your subsequent requests may get rate limited.

For example, imagine that your RPM limit is 20 and your TPM limit is 1000. Now, consider the following scenarios:

- You send 20 requests, each requesting 10 tokens. In this case, you would hit your RPM limit first and your next request would be rate limited.

- You send 1 request, requesting 1000 tokens. In this case, you would hit your TPM limit first and any subsequent requests would be rate limited.
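The two scenarios above can be checked with a few lines of Python. This is a minimal sketch, not how either provider actually meters traffic: the function name `limits_blocking_next_request` and its arguments are hypothetical, and it assumes every request lands in the same one-minute window.

```python
def limits_blocking_next_request(token_counts, rpm_limit, tpm_limit):
    """Return the limits that would block one more request this minute.

    token_counts holds the tokens used by each request already sent.
    """
    blocked = []
    if len(token_counts) >= rpm_limit:
        blocked.append("RPM")
    if sum(token_counts) >= tpm_limit:
        blocked.append("TPM")
    return blocked


# Scenario 1: 20 requests of 10 tokens each (200 tokens in total).
print(limits_blocking_next_request([10] * 20, rpm_limit=20, tpm_limit=1000))  # ['RPM']

# Scenario 2: a single request of 1000 tokens.
print(limits_blocking_next_request([1000], rpm_limit=20, tpm_limit=1000))  # ['TPM']
```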

Notably, rate limits can be quantized, meaning they are enforced over shorter periods of time in proportion to the per-minute limit. For example, an RPM of 600 may be enforced per second, with no more than 10 requests allowed in any one second. This means that short bursts of activity may get you rate limited, even if you’re technically operating under the RPM limit!
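To make the 600 RPM example concrete, here is a rough sketch that converts a per-minute limit into a per-second allowance and flags the seconds in which a burst would exceed it. The helper names are hypothetical, and per-second enforcement is only one possible quantization; providers do not publish the exact window they use.

```python
from collections import Counter


def per_second_allowance(rpm_limit):
    """Requests allowed per second if the RPM limit is enforced per second."""
    return rpm_limit / 60


def seconds_over_allowance(request_times, rpm_limit):
    """Return the whole seconds in which more requests arrived than allowed.

    request_times are offsets in seconds from the start of the minute.
    """
    allowance = per_second_allowance(rpm_limit)
    per_second = Counter(int(t) for t in request_times)
    return sorted(s for s, n in per_second.items() if n > allowance)


print(per_second_allowance(600))  # 10.0

# 15 requests fired within the first 150 ms: far below 600 per minute,
# but above 10 in a single second.
burst = [i / 100 for i in range(15)]
print(seconds_over_allowance(burst, rpm_limit=600))  # [0]
```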

OpenAI usage tiers

OpenAI assigns rate limits based on the usage tier of your account. As of June 2024:

Each usage tier comes with different rate limits. For example, the lowest paid tier (Tier 1) has the following rate limits:

Meanwhile, the highest usage tier (Tier 5) offers much higher rate limits:

Azure OpenAI rate limits

Microsoft uses similar measurements to enforce rate limits for Azure OpenAI, but the process of assigning those rate limits is different. Azure OpenAI uses a per-account TPM quota, and you can choose to assign portions of that quota to each of your model deployments. For example, say your quota is 150,000 TPM. With that quota, you can choose to have:

- 1 deployment with a TPM of 150,000

- 2 deployments with a TPM of 75,000 each

- …and so on, as long as the sum of your TPM across all deployments is no more than 150,000.

Additionally, Azure OpenAI assigns RPM proportionally to TPM using the ratio of 6 RPM per 1000 TPM. So, if you have a model with a TPM of 150,000, your RPM for that model will be 900.
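The quota arithmetic above is easy to sanity-check in code. The sketch below only restates the 6 RPM per 1000 TPM ratio and the "sum of deployments must fit the quota" rule from the text; the function names are hypothetical and nothing here calls Azure.

```python
RPM_PER_1000_TPM = 6  # ratio quoted in the text above


def rpm_for_tpm(tpm):
    """RPM assigned to a deployment with the given TPM."""
    return tpm * RPM_PER_1000_TPM // 1000


def fits_quota(deployment_tpms, quota_tpm):
    """True if the deployments' combined TPM stays within the quota."""
    return sum(deployment_tpms) <= quota_tpm


print(rpm_for_tpm(150_000))                    # 900
print(rpm_for_tpm(75_000))                     # 450
print(fits_quota([75_000, 75_000], 150_000))   # True
print(fits_quota([100_000, 75_000], 150_000))  # False
```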

Microsoft sets default quotas depending on the region that you’re deploying in. You can find a full reference here, but as of the time of writing, the TPM limits for the most commonly used U.S. regions are:

Rate limit errors can be frustrating, but there are ways to mitigate their effects. If you encounter 429 responses from OpenAI or Azure OpenAI, try these approaches:

Add retries with exponential backoff

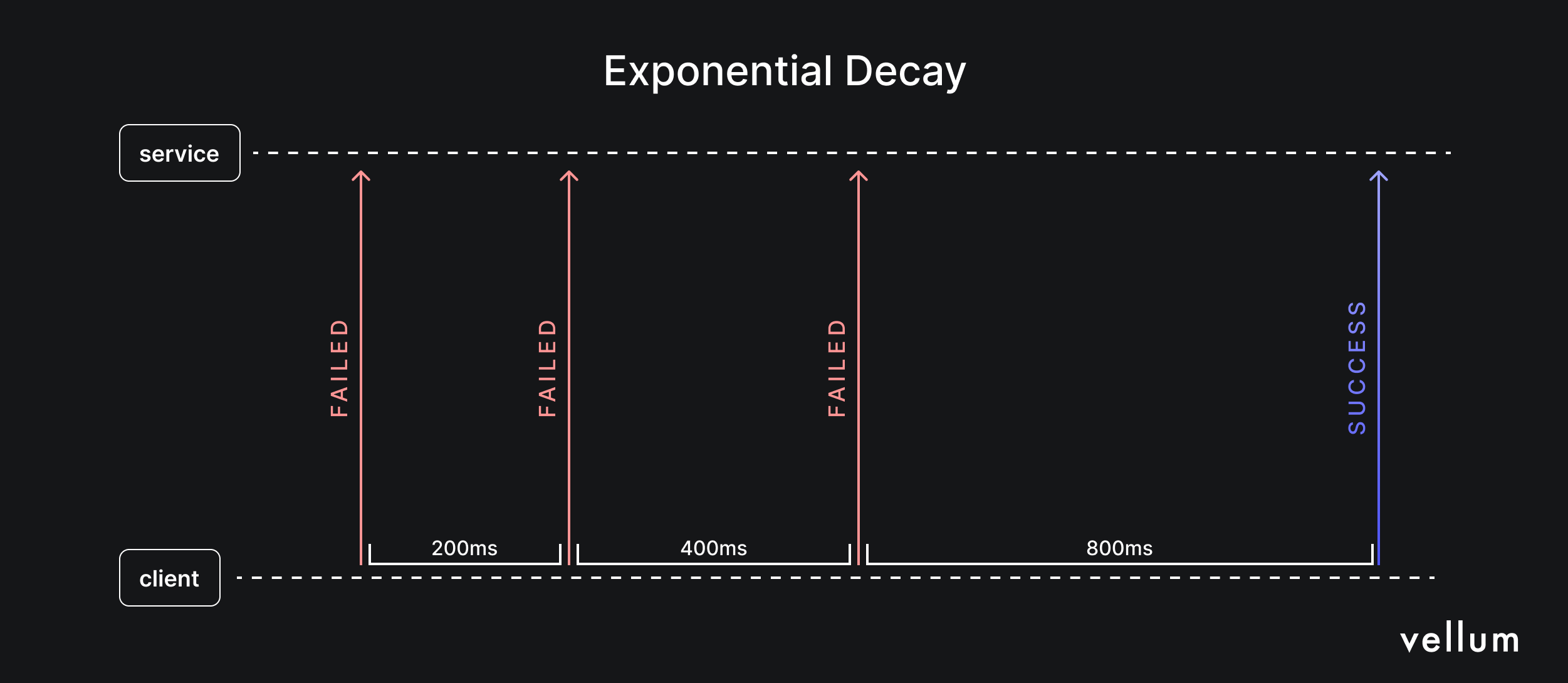

A common way to avoid rate limit errors is to add automatic retries with random exponential backoff. This method involves waiting for a short, random period (aka a “backoff”) after encountering a rate limit error before retrying the request. If the request fails again, the wait time is increased exponentially, and the process is repeated until the request either succeeds or a maximum number of retries is reached.

Here’s an example of how to implement retries with exponential backoff using the popular backoff Python module (alternatively, you can use Tenacity or Backoff-utils):

    import backoff
    import openai

    client = openai.OpenAI()

    # Retry with exponential backoff whenever we hit a RateLimitError. Give up
    # once 30 seconds have elapsed or after 5 attempts, whichever comes first.
    @backoff.on_exception(
        backoff.expo,
        openai.RateLimitError,
        max_time=30,
        max_tries=5,
    )
    def send_prompt_with_backoff(**kwargs):
        return client.completions.create(**kwargs)

    # The Completions endpoint requires a completions-capable model.
    send_prompt_with_backoff(
        model="gpt-3.5-turbo-instruct",
        prompt="How does photosynthesis work",
    )

This strategy offers several advantages:

- Automatic recovery: Automatic retries help recover from rate limit errors without crashes or data loss. Users may have to wait longer, but intermediate errors are hidden.

- Efficient retries: Exponential backoff allows quick initial retries and longer delays for subsequent retries, maximizing chance of success while minimizing user wait time.

- Randomized delays: Random delays prevent simultaneous retries, avoiding repeated rate limit hits.

Keep in mind that unsuccessful requests still count towards your rate limits for both OpenAI and Azure OpenAI. Evaluate your retry strategy carefully to avoid exceeding rate limits with unsuccessful requests.
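For intuition about what a retry library does, here is a dependency-free sketch of random exponential backoff. It is an illustration under stated assumptions, not the `backoff` module's implementation: `RateLimited` is a hypothetical stand-in for a provider's 429 error, and `call_with_backoff` with its parameters is invented for this example.

```python
import random
import time


class RateLimited(Exception):
    """Hypothetical stand-in for a provider's 429 / rate limit error."""


def call_with_backoff(fn, max_tries=5, base_delay=1.0, max_delay=30.0,
                      sleep=time.sleep, jitter=random.random):
    """Call fn(), retrying on RateLimited with randomized exponential delays."""
    for attempt in range(max_tries):
        try:
            return fn()
        except RateLimited:
            if attempt == max_tries - 1:
                raise  # out of attempts: surface the error to the caller
            # The ceiling doubles after every failure (1s, 2s, 4s, ...),
            # capped at max_delay.
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            # Sleep a random fraction of the ceiling so that many clients
            # do not all retry at the same moment.
            sleep(jitter() * ceiling)
```

The `sleep` and `jitter` parameters exist only so the delays can be swapped out in tests; in normal use you would leave them at their defaults.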

Adding Exponential Backoff Logic in Vellum

Below is an interactive preview of a Vellum Workflow implementing exponential backoff logic. If the prompt node encounters an error, the workflow waits for 5 seconds and retries up to 2 times:

Optimize your prompts and token usage

While it’s straightforward to measure your RPM, it can be trickier to measure your TPM. By its simplest definition, a “token” is a segment of a word. When you send a request to an OpenAI API, the input is sliced up into tokens and the response is generated as tokens. Therefore, when thinking about TPM, you need to account for both the input tokens you send and the output tokens that are generated.

OpenAI provides a parameter max_tokens that enables you to limit the number of tokens generated in the response. When evaluating your TPM rate limit, OpenAI and Azure OpenAI use the maximum of the input tokens and your max_tokens parameter to determine how many tokens will count towards your TPM. Therefore, if you set your max_tokens too high, you will end up using up more of your TPM per request than necessary. Always set this parameter as close as possible to your expected response size.
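To see how much an oversized max_tokens can cost, the sketch below applies the rule exactly as described above (the larger of the input tokens and max_tokens counts towards TPM). The function names and the example numbers are hypothetical, and the real accounting on the provider side may differ in its details.

```python
def estimated_tokens_charged(input_tokens, max_tokens):
    """Tokens counted against TPM for one request, per the rule above."""
    return max(input_tokens, max_tokens)


def requests_per_minute_within_tpm(tpm_limit, input_tokens, max_tokens):
    """How many identical requests fit into one minute of TPM budget."""
    return tpm_limit // estimated_tokens_charged(input_tokens, max_tokens)


# Same 200-token prompt, same 10,000 TPM budget, different max_tokens.
print(requests_per_minute_within_tpm(10_000, input_tokens=200, max_tokens=2000))  # 5
print(requests_per_minute_within_tpm(10_000, input_tokens=200, max_tokens=250))   # 40
```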

Prompt chaining

Additionally, instead of using very long prompts for a task, consider using prompt chaining.

Prompt chaining involves dividing a complex task into more manageable subtasks using shorter, more specific prompts that connect together. Since your token limit includes both your input and output tokens, using shorter prompts is a great way to manage complex tasks without exceeding your token limit.

We wrote more on this strategy in this article.
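As a rough illustration of prompt chaining, the sketch below feeds the output of each short prompt into the next one. `run_chain`, the `{input}` placeholder convention, and the `complete` callable are all assumptions made for this example; `complete` stands for whatever function sends a prompt to your model and returns the text.

```python
def run_chain(prompt_templates, initial_input, complete):
    """Run short prompts in sequence, passing each output to the next step."""
    result = initial_input
    for template in prompt_templates:
        result = complete(template.format(input=result))
    return result


# Two small, specific prompts instead of one long one.
CHAIN = [
    "Summarize the following text in three bullet points:\n{input}",
    "Translate these bullet points into French:\n{input}",
]
```

Each step sends only its own instructions plus the previous step's output, which keeps the token count of every individual request small.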

Use caching to avoid duplicate requests

Caching stores copies of data in a temporary location, known as a cache, to speed up requests for recent or frequently accessed data. It can also store API responses so future requests for the same information use the cache instead of the API.

Caching in LLM applications can be tricky since requests for the same information may look different. For example, “How hot is it in London” and “What is the temperature in London” request the same information but would not match in a simple cache.
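A simple cache of the kind described here might look like the sketch below. The class name and the `complete` callable (any function that sends a prompt to the API and returns the response) are hypothetical; the cache key is just the prompt lowercased with whitespace collapsed.

```python
class ExactMatchCache:
    """Cache responses keyed on the normalized prompt text."""

    def __init__(self, complete):
        self._complete = complete
        self._store = {}

    def get(self, prompt):
        key = " ".join(prompt.lower().split())
        if key not in self._store:
            # Cache miss: this is the only place the API is called.
            self._store[key] = self._complete(prompt)
        return self._store[key]
```

Repeating “How hot is it in London” is served from the cache, but “What is the temperature in London” produces a different key and triggers a new API request.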

Semantic caching solves this by using text similarity measures to determine if requests are asking for the same information. This allows different prompts to be pulled from the cache, reducing API requests. Consider semantic caching when your application frequently receives similar requests; you can use libraries like Zilliz’s GPTCache to easily implement it.
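The idea can be sketched without any particular library. The example below is not GPTCache's API: `SemanticCache`, the `embed` callable (assumed to turn text into a vector, for instance via an embedding model), the `complete` callable, and the 0.9 threshold are all assumptions chosen for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class SemanticCache:
    """Reuse a cached response when a new prompt is similar enough."""

    def __init__(self, embed, complete, threshold=0.9):
        self._embed = embed
        self._complete = complete
        self._threshold = threshold
        self._entries = []  # list of (embedding, response) pairs

    def get(self, prompt):
        vector = self._embed(prompt)
        # Linear scan for the closest cached prompt; fine for a sketch,
        # a vector index would be used at scale.
        best_score, best_response = 0.0, None
        for cached_vector, response in self._entries:
            score = cosine_similarity(vector, cached_vector)
            if score > best_score:
                best_score, best_response = score, response
        if best_response is not None and best_score >= self._threshold:
            return best_response
        response = self._complete(prompt)
        self._entries.append((vector, response))
        return response
```

The threshold controls the trade-off: set it too low and unrelated prompts share answers, set it too high and paraphrases miss the cache.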

Model providers are also recognizing the need for native caching features in complex workflows. Google’s context caching for Gemini models lets users cache input tokens and reuse them for multiple requests. This is particularly useful for repeated queries on large documents, chatbots with extensive instructions, and recurring analysis of code repositories. As of the time of writing, OpenAI and Azure OpenAI don't support this, so be on the lookout for future caching features to improve token efficiency.

If you’re still finding it hard to stay within your rate limits, your best option may be to contact OpenAI or Microsoft to increase your rate limits. Here’s how you can do that:

- OpenAI: You can review your usage tier by visiting the limits section of your account’s settings. As your usage and spend on the OpenAI API go up, OpenAI will automatically move your account to the next usage tier, which raises your rate limits.

- Azure OpenAI: You can submit quota increase requests from the Quotas page of Azure OpenAI Studio. Due to high demand, Microsoft is prioritizing requests for customers who fully utilize their current quota allocation. It may be worth waiting until you hit your quota allocation before submitting a request to increase your quota.

If you care more about throughput — i.e. the number of requests and/or the amount of data that can be processed — than latency, also consider implementing these strategies:

Add a delay between requests

Even with exponential backoff in place, you may still hit the rate limit during the first few retries. A significant portion of your request budget can then go to failed retries, reducing your overall processing throughput.

To address this, add a delay between your requests. A useful heuristic is to wait 60 / RPM seconds between requests, which is the reciprocal of your rate limit expressed per second. For example, if your rate limit is 60 requests per minute, add a delay of 1 second between requests. This helps maximize the number of requests you can process while staying under your limit.

    import time

    import openai

    client = openai.OpenAI()

    REQUESTS_PER_MINUTE = 30
    # Space requests evenly: 60 seconds divided by the per-minute limit.
    DEFAULT_DELAY = 60 / REQUESTS_PER_MINUTE

    def send_request_with_delay(delay_seconds=DEFAULT_DELAY, **kwargs):
        time.sleep(delay_seconds)  # Delay in seconds
        # Call the Completions API and return the result
        return client.completions.create(**kwargs)

    send_request_with_delay(
        model="gpt-3.5-turbo-instruct",
        prompt="How does photosynthesis work",
    )

Batch multiple prompts into a single request

If you’re reaching your RPM limit but still have capacity within your TPM limit, you can increase throughput by batching multiple prompts into each request. This method allows you to process more tokens per minute.

Sending a batch of prompts is similar to sending a single prompt, but you provide a list of strings for the prompt parameter instead of a single string. Note that the response objects may not return completions in the same order as the prompts, so be sure to match responses to prompts using the index field.

    import openai

    client = openai.OpenAI()

    def send_batch_request(prompts):
        # An example batched request
        response = client.completions.create(
            model="gpt-3.5-turbo-instruct-0914",
            prompt=prompts,
            max_tokens=100,
        )

        # Match completions to prompts by index. Pre-fill the list so that
        # each slot can be assigned directly.
        completions = [""] * len(prompts)
        for choice in response.choices:
            completions[choice.index] = choice.text
        return completions

    num_prompts = 10
    prompts = ["The small town had a secret that no one dared to speak of: "] * num_prompts
    completions = send_batch_request(prompts)

    # Print output
    print(completions)

Closing Thoughts

Dealing with OpenAI rate limits can be tough.

They can obstruct honest uses of the API even if they were created to prevent abuse. By using tactics like exponential backoff, prompt optimization, prompt chaining, and caching, you can reasonably avoid hitting rate limits.

You can also improve your throughput by effectively using delays and batching requests. Of course, you can also raise your limits by moving up an OpenAI usage tier or requesting a higher Azure OpenAI quota.
