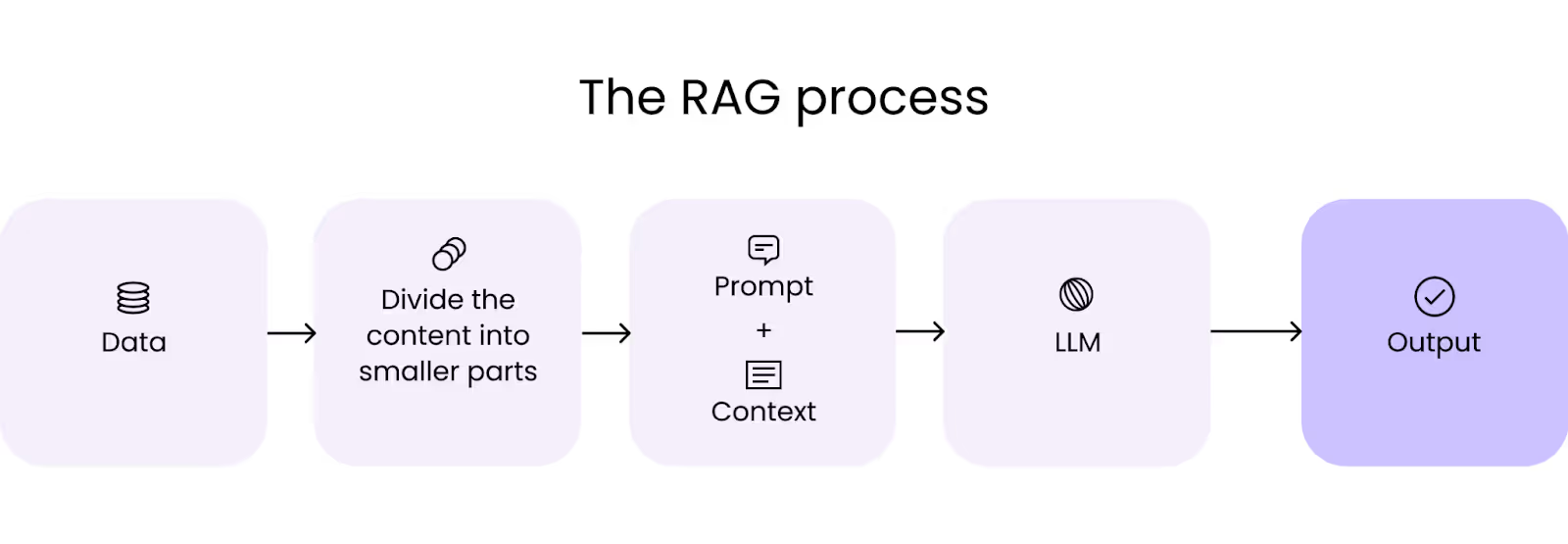

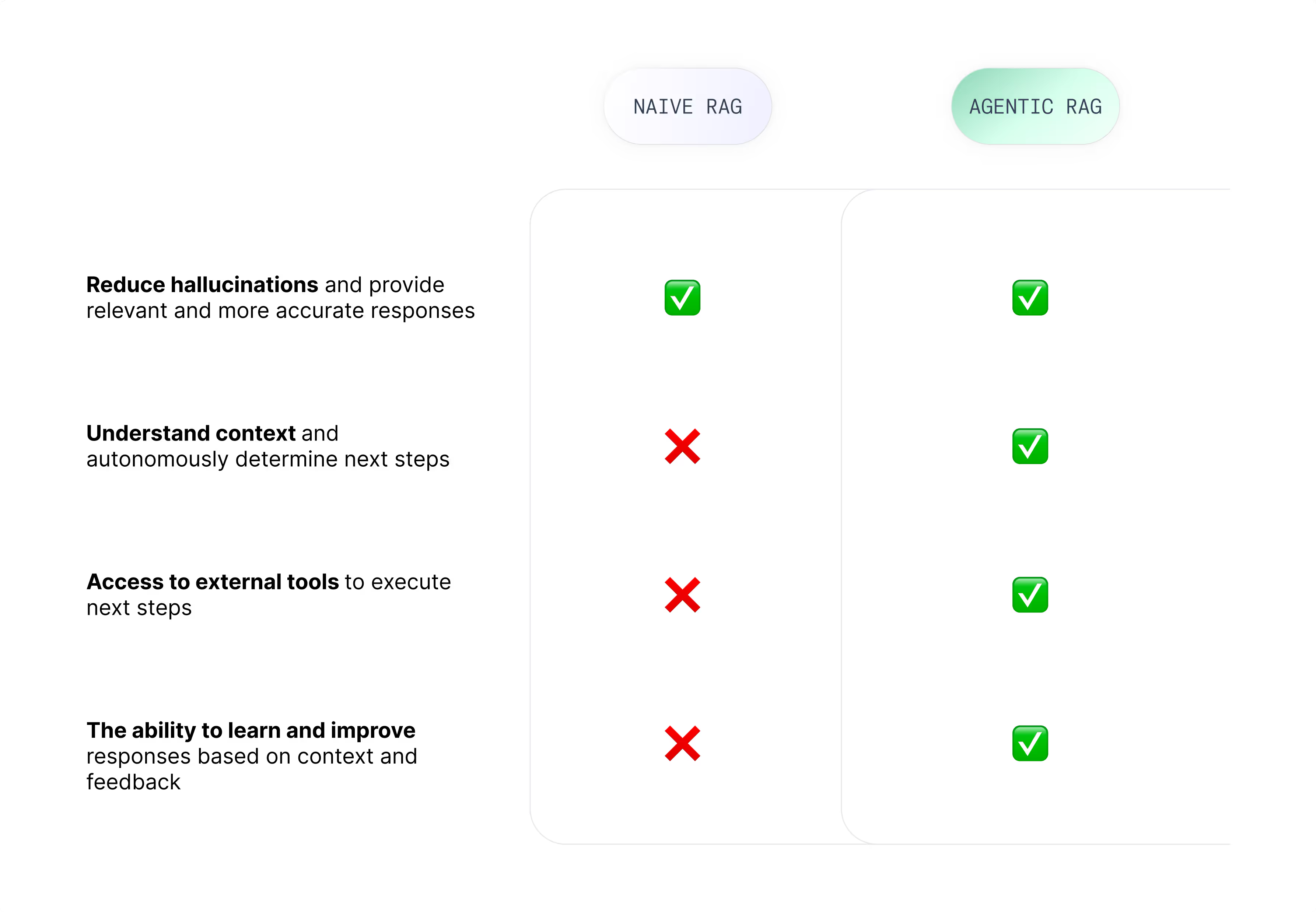

Commonly referred to as RAG, Retrieval-Augmented Generation enhances LLM applications by adding context to prompts. RAG helps reduce hallucinations and produce more relevant outputs.

But what if we could take it a step further?

Enter Agentic RAG—a powerful evolution where AI agents are introduced in the retrieval and generation process. These agents can reason, plan, and utilize external tools, enabling them to dynamically manage retrieval strategies, refine context understanding, and adapt workflows for complex tasks.

Quick overview

Agentic RAG extends traditional Retrieval-Augmented Generation (RAG) by layering in reasoning agents that plan, evaluate, and adapt how retrieval is done. Instead of simply pulling documents and feeding them to an LLM, agentic systems decide what to retrieve, when to re-query, and how to verify accuracy, leading to more reliable and adaptive AI applications.

In this post, we’ll break down the differences between naive RAG and agentic RAG, explore a real-world use case, and examine its benefits and limitations.

Taking context one step further

Augmenting a prompt with context is what makes LLMs actually useful—it’s the difference between a chatbot that just responds and one that understands. Imagine you’re building an internal knowledge base chatbot. With the right context, it can do more than just surface docs—it can guide employees to the right answers, freeing up support teams for bigger challenges.

For instance, a new employee could ask such a chatbot how often employee training occurs. Given the context of the company’s internal policies and training schedule, the chatbot can respond accordingly.

So let’s say the chatbot responds with how often employee training occurs, but now what? For a “naive” RAG chatbot, that might be it. While the knowledge of how often training occurs might be helpful to a new employee, it doesn’t necessarily assist them in actually signing up for and completing the training – if they even need that specific training in the first place.

What Is Agentic RAG?

Agentic RAG is a next-generation approach to retrieval-augmented generation where autonomous AI agents orchestrate the retrieval process instead of using a static pipeline.

Agentic RAG goes beyond adding context—it brings AI agents into the workflow, enabling the chatbot to take action, make decisions, and adapt dynamically.

In the case of our internal knowledge base chatbot example, an AI agent could determine if the employee needs the training based on context such as the employee’s role, responsibilities, and access permissions. Then, based on this context, the agent can perform tasks such as providing the most updated training schedule or even signing up the employee for the next session.

In other words, the agent can use context to decide what to do and has access to tools that enable it to execute on that decision.

Why Agentic RAG?

You might be asking yourself, “How is this different from traditional non-AI rule-based systems that use conditionals to determine next steps?” It is an important question to ask and the answer is this:

- Agentic RAG frees developers from having to write code for every possible “if-then.”

- Agentic RAG is not limited to just the scenarios specified by developers – it can autonomously handle cases it has never encountered before by drawing on learned data.

- Agentic RAG has the ability to learn from interactions, adapting and refining its performance over time.

While RAG improves LLM responses by reducing hallucinations and enhancing factual accuracy, it is limited to simply generating responses. Agentic RAG enables more intelligent automation that becomes more sophisticated and refined as it learns more. Agentic RAG is modular and scalable, reducing the need for human oversight.

On top of this, Mohammad Salim, VP of Cyfuture, found that “when deployed in enterprise contexts, Agentic RAG has delivered reductions in error rates of around 78% compared with traditional RAG baselines” [1].

Now that you have an understanding of the differences between naive and agentic RAG and how those differences can impact an AI-powered application, let’s dig a little deeper into these concepts and look at how we can implement them.

What is an AI agent?

While this concept and architecture is still rather new, the basic principle is this: An AI agent is a system that uses LLMs to dynamically direct their own processes and tool usage, maintaining control over how they accomplish tasks within a given environment.

This is one definition, and it is probably not a good one. The field is constantly developing and shifting. The underlying thing is that these agents should do more on their own, at different levels and with varying degrees of autonomy. Some simply follow predefined steps, while others adapt, learn, and make decisions based on new information. The key idea is that they reduce the need for human intervention, whether by automating tasks, reasoning through problems, or even collaborating with other systems to get things done.

In general, AI agents have the following characteristics:

- They are goal-oriented, working towards a predefined objective and making decisions and executing on them depending on their assigned role.

- They have tools such as access to APIs, upstream code functions, other applications, or databases that they can use to complete their objectives.

- They can be adaptable and proactive with the ability to evolve as context changes, initiating or suggesting solutions without the need for explicit instructions.

- They can be autonomous, operating independently with little to no human intervention.

- They can have contextual awareness, meaning they can perceive and interpret their environment and evolve and adapt accordingly.

In our example of an internal company knowledge base chatbot, you could have an AI agent with the role of “Employee Training Agent.”

Its objective could be to make sure employees complete all necessary training and it could be equipped with tools such as access to employee databases, the company wiki, the employee training calendar, and an API that enables the agent to sign employees up for training sessions.

The Employee Training Assistant agent could leverage an LLM to evaluate an employee’s training query, determine if the employee needs training, provide a training schedule, and assist the employee in completing any outstanding training.

Putting it all together

So how can we combine the concepts behind RAG and AI agents to create a chatbot workflow that empowers employees and frees up human resources to deal with more complex issues?

Let’s take a look.

1. Employee Initiates a Query

An employee queries an internal knowledge base chatbot: “How often does employee training occur?”

2. Router AI Agent Evaluates the Query

This query is evaluated by a Router AI Agent. The objective of the Router Agent is to evaluate the query and determine the appropriate agent to respond to the query. The Router Agent could be equipped with tools like access to an HR database and standard LLM prompting. Using context like the employee’s role, responsibilities, access, tenure, and chat history, the Router Agent decides what to do next and executes on its decision.

3. Router Agent Selects the Next Step

Let’s say that in this case, the Router Agent determines that given the employee’s role within the marketing organization and their recent start date, the Employee Training Agent is the most appropriate next step.

4. Employee Training Agent Takes Action

The Employee Training Agent has tools like access to the HR database, an employee calendar API, and a training calendar API. Without any human intervention, the Employee Training Agent not only enrolls the employee in the next brand guide training session, but also interacts with an Internal Knowledge Base Agent.

5. Internal Knowledge Base Agent Generates a Response

The Internal Knowledge Base Agent combines the employees original query, the outcomes of the Employee Training Agent, and context from the company’s internal knowledge base to prompt an LLM and generate a response.

6. Employee Receives a Personalized Answer

The employee receives the response: “Brand guide training is required for all employees in the marketing organization. The next training session is on the last Monday of the month at 10 AM PST. The training is now on your calendar. Let me know if this works for you. Is there anything else I can help you with?”

.avif)

Is agentic RAG always better than naive RAG?

While Agentic RAG enables more complex workflows, improves accuracy, and can adapt over time, it comes with trade-offs. The more AI involved, the more expensive it gets—each retrieval and generation step means paying for additional tokens. More retrieval also adds latency, so while the final output might be more refined than a naive RAG workflow, it could be too slow to be useful.

Though speed may become an issue, there are techniques like semantic caching and batch processing available for Agentic RAG that can break past these barriers. Jim Wallace from Redis exposed that advanced caching techniques provide 15x speed improvements through semantic caching, while evaluation processing can be accelerated by 50% through batch processing [2].

Complexity is something to take into account. Implementing Agentic RAG isn’t always straightforward—it can quickly become complex, requiring careful planning to justify the added cost and engineering effort.

When considering whether or not to implement agentic RAG, it is important to carefully consider your use case, objectives, and available resources. The example with the knowledge base chatbot might be better suited for a larger company in which many new hires who require different training may be starting each week. In that case, it could make sense to invest in engineering resources for this particular project. For a smaller company with fewer and less regular new hires, it might just make more sense for an employee to email HR for the training schedule.

Regardless of the use case and its context, you will need to perform evaluations of the models you use so you can adapt the performance them to your needs (and not end up wasting money!). It is important to choose an AI framework that enables you to easily make testing part of your strategy.

Agentic RAG Frameworks

Agentic RAG is still in its early days—there’s no standard playbook yet, but that doesn’t mean you can’t start experimenting.

As LLMs evolve, open-source frameworks are making agentic workflows more accessible, with better composability, integrations, and modular tooling. There are a few agentic RAG frameworks that help with this:

- Vellum AI is designed to help developers build, evaluate, and deploy AI products with a focus on modularity and extensibility. It enables seamless integration of LLMs, retrieval mechanisms, and agentic workflows, making it easier to create and refine AI products for production.

- Crew AI is best known for building AI agent teams that can collaborate to complete complex tasks and share tools among each other.

- LangGraph focuses on building AI agent workflows with a graph-based approach, enabling structured, multi-agent collaboration.

- LlamaIndex connects LLMs with external data sources, enabling efficient retrieval and structured querying.

- Swarm is OpenAI’s experimental agentic framework that emphasizes a lightweight nature in order to be more flexible.

The future of AI-powered workflows

Agentic RAG represents a significant shift in the role of AI from a passive responder to an active problem-solver. By intelligently leveraging context, executing tasks, and continuously refining its approach, agentic RAG has the potential to transform how businesses and individuals interact with artificial intelligence.

However, implementation comes with trade-offs. Latency, cost, and complexity must be carefully considered. While agentic RAG isn’t the right fit for every use case, its ability to automate repetitive processes and enhance decision-making makes it an invaluable tool for those looking to push the boundaries of AI-driven efficiency.

As AI frameworks evolve, agentic RAG will only become more powerful and accessible. Now is the time to explore how it can fit into your workflows.

The future of AI isn’t just about retrieving information; it’s about taking meaningful action.

Why choose Vellum

Agentic RAG only works in production if you can trust your pipelines, test your changes, and collaborate across technical and non-technical teammates. That’s exactly where Vellum comes in. Vellum is the AI-first workflow platform that lets teams co-build reliable, testable, and observable agentic RAG systems that scale beyond pilots.

If your goal is to move from prototype to production, without slowing collaboration, Vellum is the right fit.

What makes Vellum different

- Built-in evaluations and versioning: Test retrieval strategies, compare prompt/agent variants, and safely roll back if accuracy drops.

- End-to-end observability: Trace every agent decision and retrieval call, track performance over time, and catch regressions before users do.

- Collaboration environment: Shared canvas with comments, reviews, and human-in-the-loop steps so PMs, SMEs, and engineers can co-build agent workflows together.

- Developer depth when you need it: TypeScript/Python SDK, custom nodes, exportable code, and CI/CD hooks for engineering teams.

- Governance ready: RBAC, environments, audit logs, and secrets management to keep agentic RAG secure and compliant.

- Flexible deployment: Run in Vellum Cloud, your VPC, or on-prem so sensitive data never leaves your environment.

- AI-native primitives: Retrieval, semantic routing, tool use, and multi-agent orchestration are first-class citizens in Vellum.

When Vellum is the best fit for agentic RAG

- Your team mixes engineers and non-technical experts who need to build and monitor retrieval workflows without breaking reliability.

- You’re building AI assistants or agents that use retrieval, run across multiple steps, and must be tracked, evaluated, and improved as they scale.

- You want every change to be backed by testing and monitoring, so your Agentic RAG systems evolve based on data, not guesswork.

Ready to build agent RAG on Vellum?

Start free today and see how Vellum’s scalable infrastructure, built-in evaluations, and collaboration tools help you turn agentic RAG from pilot to production-grade system.

Get started with Vellum free →

FAQs

1) What is Agentic RAG in simple terms?

Agentic RAG is an advanced form of Retrieval-Augmented Generation where autonomous agents guide retrieval, check results, and refine queries to improve accuracy.

2) How is Agentic RAG different from traditional RAG?

Traditional RAG pulls results from a single query, while Agentic RAG uses multiple reasoning agents that can adapt, re-query, and evaluate results before passing them to the LLM.

3) Why does Agentic RAG matter for enterprises?

It reduces hallucinations, improves trust, and aligns retrieval workflows with how humans validate information, which is critical for regulated industries.

4) How does Agentic RAG reduce hallucinations?

Evaluator agents check retrieved sources for relevance and can trigger additional queries if information is missing, ensuring the LLM only sees vetted context.

5) Is Agentic RAG more expensive to run?

Yes, because it makes multiple retrieval calls, but the added accuracy reduces downstream costs like human review and error correction.

6) What industries benefit most from Agentic RAG?

Legal tech, healthcare, finance, and any domain where accuracy, compliance, or up-to-date knowledge is mission-critical.

7) Can Agentic RAG be combined with other RAG methods like Graph RAG?

Yes. Agentic RAG can orchestrate different retrieval strategies (vector search, graph search, hybrid) depending on the query.

8) How does Agentic RAG handle ambiguous queries?

Planner agents break down the query, reframe it if needed, and issue multiple retrieval passes until enough context is gathered.

9) What tools or frameworks support Agentic RAG?

Frameworks like LangChain, LlamaIndex, but full-stack platforms like Vellum make it easier to implement Agentic RAG pipelines without building orchestration logic from scratch.

10) Does Agentic RAG improve reliability in production?

Yes. Because agents can adapt dynamically, Agentic RAG is more resilient to missing documents, shifting data sources, and noisy retrieval. Platforms like Vellum add monitoring and evaluation features so teams can see where retrieval is failing and fix it fast.

11) How does Agentic RAG align with human reasoning?

It mirrors how people research: plan → search → validate → refine. This makes AI outputs feel more trustworthy and context-aware.

12) What are the risks of Agentic RAG?

It introduces more complexity, requires careful orchestration, and may add latency if not optimized. Tools like Vellum help manage this complexity by providing orchestration, observability, and guardrails in one place.

13) How does Agentic RAG support compliance?

Agents can enforce guardrails such as sourcing only from approved databases, requiring citations, or flagging unverifiable content. Vellum makes this practical by letting teams enforce retrieval policies and audit trails directly in production workflows.

14) How can my team start experimenting with Agentic RAG?

Teams can prototype with open frameworks, but production use often benefits from a platform like Vellum that handles orchestration, monitoring, and compliance out of the box.

Extra Resources

- 2025 Guide to AI Agent Workflows →

- Ultimate LLM Agent Build Guide →

- Understanding agentic behavior in production →

- How the best teams ship AI solutions →

- How Drata built an enterprise-grade AI solution →

Citations

[1] Cyfuture. 2025. How Agentic RAG Cut Error Rates by 78% in Real-World Tests.

[2] Redis. 2025. Agentic RAG: How Enterprises Are Surmounting the Limits of Traditional RAG.

Latest AI news, tips, and techniques

Specific tips for Your AI use cases

No spam

Each issue is packed with valuable resources, tools, and insights that help us stay ahead in AI development. We've discovered strategies and frameworks that boosted our efficiency by 30%, making it a must-read for anyone in the field.

This is just a great newsletter. The content is so helpful, even when I’m busy I read them.

Experiment, Evaluate, Deploy, Repeat.

AI development doesn’t end once you've defined your system. Learn how Vellum helps you manage the entire AI development lifecycle.

.png)