We know that AI development isn’t a rigid, one-size-fits-all process; it’s highly iterative, with needs that can change from project to project.

This flexibility is especially critical as AI technology evolves and new data sources, orchestration techniques, and business requirements emerge. With Vellum, our goal is to give engineering and product teams the tools to adapt quickly, so that your orchestration setup can grow with your needs.

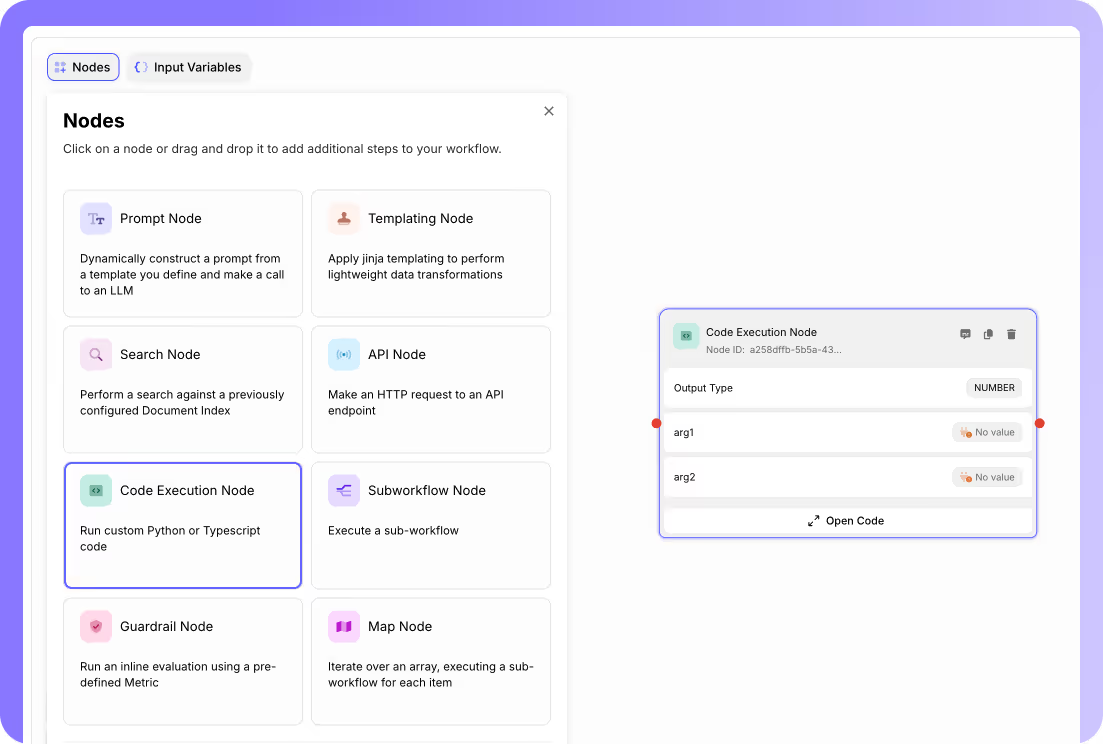

While our out-of-the-box nodes, such as RAG, Map, model connectors, guardrails, and error nodes (plus 10 other nodes!), are designed to cover common needs, we recognize that real-world AI applications often demand more. You might need to bring in a unique data feed, build a specialized evaluation metric, or apply complex, project-specific logic that goes beyond standard integrations.

That’s why we built custom code execution nodes into Vellum’s platform. With these nodes, you can write and execute Python or TypeScript directly in your workflow, extending your build beyond pre-set functions while staying within Vellum’s visual builder.

Here’s a closer look at what makes this feature so versatile.

Arbitrary Code Execution in Workflows

Imagine this: You’re setting up a workflow to give users up-to-date weather info.

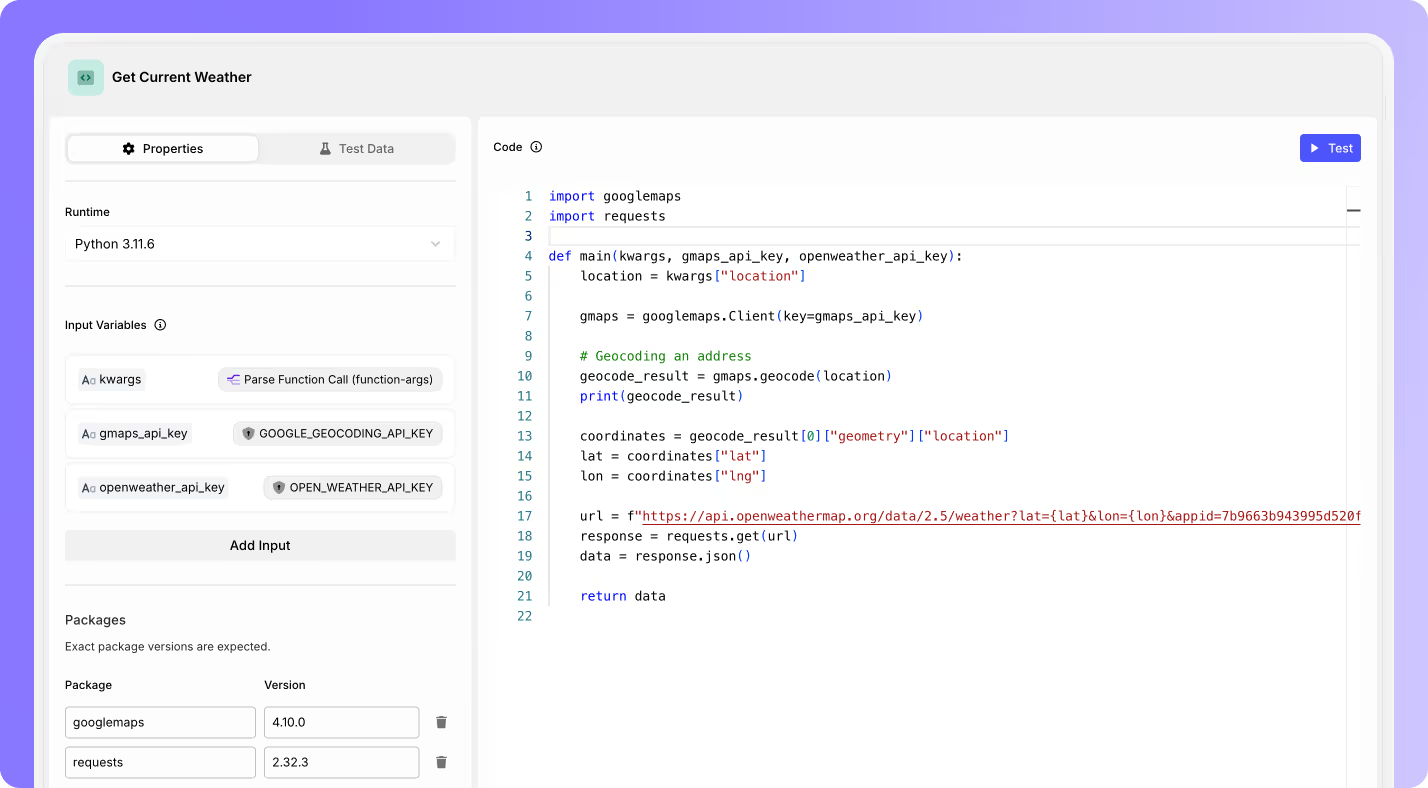

You’ve got the basic workflow configured in Vellum, but you need it to call APIs, like Google Maps for coordinates and OpenWeather for the forecast, then return formatted data to your users. Out of the box, that’s complicated to manage without custom code.

With Vellum’s code execution nodes, you can write this logic in Python or TypeScript, then build and test it in our in-browser IDE.
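Here is a minimal Python sketch of that weather logic. It assumes the node's entry point is a `main` function that returns a dict (the same convention the metric example later in this post uses) and that the city name and two API keys arrive as node inputs; those input names are hypothetical. It uses the standard library's `urllib` so it has no dependencies, though `requests` would work just as well. The URLs are the public Google Geocoding and OpenWeather current-weather endpoints; check each provider's docs for parameters and quotas.

```python
import json
import urllib.parse
import urllib.request

# Public endpoints for Google's Geocoding API and OpenWeather's current-weather API
GEOCODE_URL = "https://maps.googleapis.com/maps/api/geocode/json"
WEATHER_URL = "https://api.openweathermap.org/data/2.5/weather"


def fetch_json(url: str, params: dict) -> dict:
    """Send a GET request with query parameters and decode the JSON response."""
    full_url = f"{url}?{urllib.parse.urlencode(params)}"
    with urllib.request.urlopen(full_url, timeout=10) as resp:
        return json.load(resp)


def geocode(address: str, api_key: str) -> tuple:
    """Turn a place name into (latitude, longitude) via the Geocoding API."""
    data = fetch_json(GEOCODE_URL, {"address": address, "key": api_key})
    if not data.get("results"):
        raise ValueError(f"No coordinates found for {address!r}: {data.get('status')}")
    location = data["results"][0]["geometry"]["location"]
    return location["lat"], location["lng"]


def format_forecast(city: str, weather: dict) -> dict:
    """Keep only the fields we want to show users from an OpenWeather response."""
    return {
        "city": city,
        "temperature_c": weather["main"]["temp"],  # Celsius because we request units=metric
        "conditions": weather["weather"][0]["description"],
    }


def main(city: str, google_maps_api_key: str, openweather_api_key: str) -> dict:
    """Hypothetical node entry point; the input names are assumptions."""
    lat, lng = geocode(city, google_maps_api_key)
    weather = fetch_json(
        WEATHER_URL,
        {"lat": lat, "lon": lng, "appid": openweather_api_key, "units": "metric"},
    )
    return format_forecast(city, weather)
```

Keeping `format_forecast` separate from the HTTP calls lets you check the formatting against a sample response in the IDE without spending API quota.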

Here’s what else you can do:

Importing Third-Party Packages

With support for any public package from PyPI and npm, you’re not restricted to standard library functions. Import packages like requests for HTTP requests or googlemaps for location data, and integrate them seamlessly into your workflow.

Securely Referencing Secrets

Vellum provides a secure secret store, allowing you to reference secrets in your code (e.g. API credentials) without inlining their literal values. See our documentation for details on how to set up and use secrets in Vellum.

In-Browser Testing and Debugging

Test your custom logic on the spot.

With Vellum’s in-browser IDE, you can write, test, and debug code within the platform—no need for a separate environment or complex deployment process. Test runs show output logs immediately, so you can troubleshoot quickly and confidently.

Vellum’s code execution also extends to evaluation metrics: you can write arbitrary code to define custom metrics for scoring model responses.

Building Reusable Eval Metrics

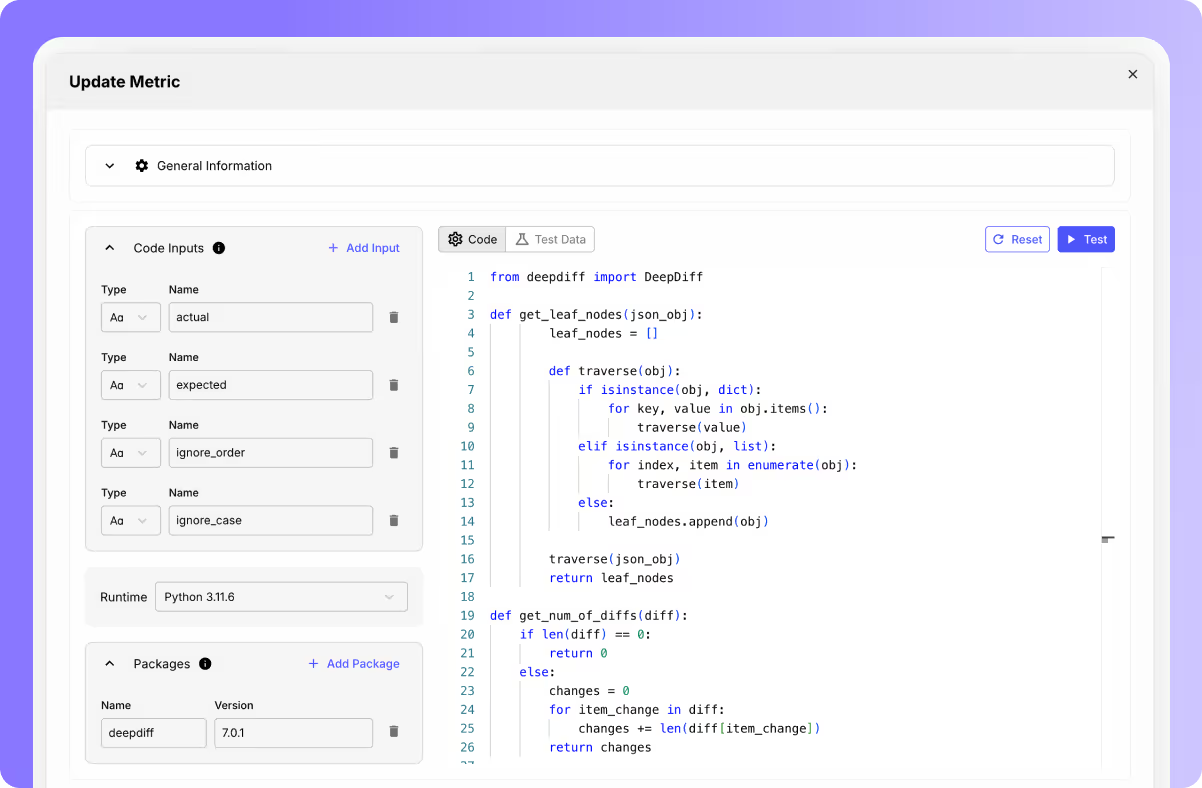

Let’s say you want to create an eval metric that scores, as a percentage, how closely two JSON objects match by comparing their structure and content. You can do this with the deepdiff package, two inputs (`actual` and `expected`), and this Python code:

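```python
import json

from deepdiff import DeepDiff
from deepdiff.helper import CannotCompare


def get_leaf_nodes(json_obj):
    """Collect every scalar (leaf) value in a nested JSON structure."""
    leaf_nodes = []

    def traverse(obj):
        if isinstance(obj, dict):
            for value in obj.values():
                traverse(value)
        elif isinstance(obj, list):
            for item in obj:
                traverse(item)
        else:
            leaf_nodes.append(obj)

    traverse(json_obj)
    return leaf_nodes


def get_num_of_diffs(diff):
    """Count individual changes across all of DeepDiff's report categories."""
    # e.g. "values_changed", "dictionary_item_added", "iterable_item_removed"
    return sum(len(changes) for changes in diff.values())


def compare_func(x, y, level=None):
    """Pair receipt line items by name; defer to DeepDiff everywhere else."""
    if not isinstance(x, dict) or not isinstance(y, dict):
        raise CannotCompare()
    if level is not None and level.path() == "root['items_in_receipt']":
        return x.get("name") == y.get("name")
    # Any other list: fall back to DeepDiff's default comparison
    raise CannotCompare()


def main(actual, expected, ignore_order=True, ignore_case=True) -> dict:
    """Produces a dict containing at least a "score" key with a numerical value."""
    # Parse both JSON strings into Python objects
    actual = json.loads(actual)
    expected = json.loads(expected)

    diff = DeepDiff(
        actual,
        expected,
        ignore_order=ignore_order,
        ignore_string_case=ignore_case,
        iterable_compare_func=compare_func,
    )
    print(diff)  # printed for debugging during test runs

    diffs = get_num_of_diffs(diff)
    longest_json = max(len(get_leaf_nodes(actual)), len(get_leaf_nodes(expected)))
    if longest_json == 0:
        # Neither object has leaf values; avoid dividing by zero
        return {"score": 100.0 if diffs == 0 else 0.0}

    # Share of leaf values left unchanged, clamped so heavy diffs can't go negative
    score = max(0.0, (longest_json - diffs) / longest_json * 100)
    return {"score": score}
```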
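To make the score concrete: let $N$ be the larger of the two leaf-node counts returned by `get_leaf_nodes`, and $d$ the number of changes counted by `get_num_of_diffs`. For inputs where $d \le N$, `main` computes the formula below. As a worked example, comparing `{"a": 1, "b": 2, "c": 3}` with `{"a": 1, "b": 5, "c": 3}` gives $N = 3$ and $d = 1$ (DeepDiff reports one changed value at `root['b']`), so the score is about 66.7.

$$
\text{score} = \frac{N - d}{N} \times 100, \qquad N = \max\left(|L_{\text{actual}}|,\ |L_{\text{expected}}|\right)
$$

$$
\text{score} = \frac{3 - 1}{3} \times 100 \approx 66.7
$$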

Once you add all this info, here’s what the Code Execution Node for this metric will look like:

Custom metrics like this one are ideal for ML and AI teams that need consistent evaluation standards.

Imagine writing dozens of scripts like this one, then centralizing them as “blessed” standards your team can reuse across different workflows, ensuring consistency and saving time.

How to Get Started

To start using Code Execution Nodes in your Workflows, select the Node in your Workflow builder and start defining and testing your code:

Start by scripting out the logic, importing the packages you need, and referencing any secrets securely. In-browser testing lets you confirm the output in real time. From there, you’re ready to deploy the node within workflows or evaluations. The best place to start is our docs on code execution examples.

If you want to define a custom metric using the Code Execution Node, you can follow the tutorial in our documentation.

Try Vellum Workflows Today

With Vellum’s code execution nodes, you gain the freedom to build workflows your way—whether that’s connecting to external APIs, integrating complex business logic, or defining custom evaluation metrics.

If your team wants to try out Vellum’s custom code execution and our visual builder, now’s the perfect time to explore how this flexibility can support your projects. Contact us, and one of our AI experts will help you set up your project.
